- Tuesday, May 31

- 8:00AM - Noon:

- Charm++ (Santa Rita Room)

- POET (Rincon Room)

- WolfHPC (Executive Boardroom)

- WEST (Coronado Room)

- ROSS (Salon F)

- 1:30PM - 5:00PM:

- Infiniband and HSE (Santa Rita Room)

- WolfHPC (Executive Boardroom)

- WEST (Coronado Room)

- ROSS (Salon F)

- Wednesday, June 1

- 8:30AM - 10:00AM: Opening Plenary and opening remarks. Chair: David K. Lowenthal

- Speakers: Sally A. McKee and Bronis R. de Supinski

- Keynote I: Rethinking Shared-Memory Languages and Hardware (Sarita Adve)

- 10:30AM - 12:00PM (Noon): Plenary Session: "Best Paper Candidates" (Papers 1) Chair: Bronis R. de Supinski, sponsored by Nvidia

- An Execution Strategy and Optimized Runtime Support for Parallelizing Irregular Reductions on Modern GPUs. Xin Huo, Vignesh Ravi, Wenjing Ma and Gagan Agrawal.

- Automatic Generation of Communication Specifications from Parallel Applications. Xing Wu, Frank Mueller and Scott Pakin.

- Hystor: Making the Best Use of Solid State Drives in High Performance Storage Systems. Feng Chen, David Koufaty and Xiaodong Zhang.

- 12:00PM - 1:30PM: Lunch, sponsored by Microsoft

- 12:00PM - 1:30PM: SRC Posters Presentation

- 1:30PM - 3:00PM: Plenary Session: "Transactional Memory" (Papers 2) Chair: Yang Ni

- Transactional Conflict Decoupling and Value Prediction. Fuad Tabba, Andrew Hay and James Goodman.

- Multiset Signatures for Transactional Memory. Ricardo Quislant, Eladio Gutiérrez, Oscar Plata and Emilio L. Zapata.

- ZEBRA: A Data-Centric, Hybrid-Policy Hardware Transactional Memory Design. Ruben Titos-Gil, Anurag Negi, Manuel E. Acacio, José M. García and Per Stenstrom.

- 3:30PM - 4:30PM: Parallel Session: "Software Tools" (Papers 3a) Chair: Todd Gamblin

- Scalable Fine-grained Call Path Tracing. Nathan Tallent, John Mellor-Crummey, Michael Franco, Reed Landrum and Laksono Adhianto.

- Generic Topology Mapping Strategies for Large-scale Parallel Architectures. Torsten Hoefler and Marc Snir.

- 3:30PM - 4:30PM: Parallel Session: "Non-volatile Memory Systems" (Papers 3b) Chair: Barry Rountree

- Page Placement in Hybrid Memory Systems. Luiz Ramos, Eugene Gorbatov and Ricardo Bianchini.

- Performance Impact and Interplay of SSD Parallelism through Advanced Commands, Allocation Strategy and Data Granularity. Yang Hu, Hong Jiang, Lei Tian, Hao Luo and Dan Feng.

- 5:00PM - 6:00PM: Parallel Session: "Novel Hardware/Software Approaches" (Papers 4a) Chair: Torsten Hoefler

- SecureME: A Hardware-Software Approach to Full System Security. Siddhartha Chhabra and Yan Solihin.

- Processing data streams with hard real-time constraints on heterogeneous systems. Uri Verner, Assaf Schuster and Mark Silberstein.

- 5:00PM - 6:00PM: Parallel Session: "Power" (Papers 4b) Chair: Mohamed Zahran

- Coordinating Processor and Main Memory for Efficient Server Power Control. Ming Chen, Xiaorui Wang and Xue Li.

- Optimizing Throughput/Power Tradeoffs in Hardware Transactional Memory Using DVFS and Intelligent Scheduling. Clay Hughes and Tao Li.

- 7:00PM - 9:00PM: Posters Reception, sponsored by AMD

- Thursday, June 2

- 9:00AM - 10:00AM: Plenary session. Chair: Bronis R. de Supinski.

- Keynote II: Challenges and Opportunities in Renewable Energy and Energy Efficiency (Steve Hammond)

- 10:30AM - 12:00PM (Noon): Plenary Session: "Performance and Resilience for Solver Algorithms" (Papers 5) Chair: James Dehnert

- Characterizing the Impact of Soft Errors on Iterative Methods in Scientific Computing. Manu Shantharam, Sowmyalatha Srinivasmurthy and Padma Raghavan.

- High Performance Linpack Benchmark: A Fault Tolerant Implementation without Checkpointing. Teresa Davies, Christer Karlsson, Hui Liu, Chong Ding and Zizhong Chen.

- Modeling the Performance of an Algebraic Multigrid Cycle on HPC Platforms. Hormozd Gahvari, Allison Baker, Martin Schulz, Ulrike Yang, Kirk Jordan and William Gropp.

- 12:00PM - 1:30PM: Lunch, sponsored by Isilon

- 1:30PM - 3:00PM: Parallel Session: "Model-Based Techniques" (Papers 6) Chair: Benjamin C. Lee

- Optimizing the Datacenter for Data-Centric Workloads. Stijn Polfliet, Frederick Ryckbosch and Lieven Eeckhout.

- Predictive Coordination of Multiple On-Chip Resources for Chip Multiprocessors. Jian Chen and Lizy John.

- An Idiom-finding Tool for Increasing Productivity of Accelerators. Laura Carrington, Mustafa Tikir, Cathie Olschanowsky, Michael Laurenzano, Joshua Peraza, Allan Snavely and Stephen Poole.

- 1:30PM - 3:00PM: Parallel Session: SRC Finalist Presentations. Chair: Shan Lu

- 3:30PM - 10:00PM: Social Event and Banquet, sponsored by Intel

- Friday, June 3

- 8:30AM - 10:00AM: Plenary session and awards presentations. Chair: Sally A. McKee

- Keynote III: Performance Modeling as the Key to Extreme Scale Computing (William Gropp), followed by awards presentations (Best Paper and SRC rankings)

- 10:30AM - 12:00PM (Noon): Plenary Session: "Programming Models" (Papers 7) Chair: Qing Yi

- Mint: Realizing CUDA performance in 3D Stencil Methods with Annotated C. Didem Unat, Xing Cai and Scott B. Baden.

- MDR: Performance model driven runtime for heterogeneous parallel platforms. Jacques Pienaar, Anand Raghunathan and Srimat Chakradhar.

- Active Pebbles: Parallel Programming for Data-Driven Applications. Jeremiah Willcock, Torsten Hoefler, Nicholas Edmonds and Andrew Lumsdaine.

- 12:00PM - 1:30PM: Lunch, sponsored by Intel/AMD

- 1:30PM - 3:00PM: Parallel Session: "Accelerator-Based Mathematics" (Papers 8a) Chair: Jacqueline Chame

- Automating GPU Computing in MATLAB. Chun-Yu Shei, Pushkar Ratnalikar and Arun Chauhan.

- Using GPU to Compute Large Out-of-card FFTs. Liang Gu, Jakob Siegel and Xiaoming Li.

- Automatic SIMD Vectorization of Fast Fourier Transforms for the Larrabee and AVX Instruction Sets. Daniel McFarlin, Volodymyr Arbatov, Franz Franchetti and Markus Puschel.

- 1:30PM - 3:00PM: Parallel Session: "Caching" (Papers 8b) Chair: Martin Schulz

- Cost-Effectively Offering Private Buffers from a Shared Cache. Zhen Fang, Li Zhao, Ravi Iyer, Carlos Flores Fajardo, German Fabila Garcia, Seung Eun Lee, Steven King, Srihari Makineni, Xiaowei Jiang and Bin Li.

- A Composite and Scalable Cache Coherence Protocol for Large Scale CMPs. Yi Xu, Yu Du, Youtao Zhang and Jun Yang.

- Controlling Cache Utilization of HPC Applications. Swann Perarnau, Marc Tchiboukdjian and Guillaume Huard.

- 3:30PM - 5:00PM: Parallel Session: "Applications" (Papers 9a) Chair: Jeffrey K. Hollingsworth

- Cosmic Microwave Background Map-Making At The Petascale And Beyond. Rajesh Sudarsan, Julian Borrill, Christopher Cantalupo, Theodore Kisner, Kamesh Madduri, Leonid Oliker, Horst Simon and Yili Zheng.

- A QHD-Capable Parallel H.264 Decoder. Chi Ching Chi and Ben Juurlink.

- MP-PIPE: A Massively Parallel Protein-Protein Interaction Prediction Engine. Andrew Schoenrock, Frank Dehne, James Green, Ashkan Golshani and Sylvain Pitre.

- 3:30PM - 5:00PM: Parallel Session: "Innovative Architecture Solutions" (Papers 9b) Chair: Daniele Scarpazza

- The Elephant and the Mice: Non-Strict Fine-Grain Synchronization for Many-Core Architectures. Juergen Ributzka, Yuhei Hayashi, Joseph B. Manzano and Guang R. Gao.

- F^2BFLY: An On-Chip Free-Space Optical Network with Wavelength-Switching. Jin Ouyang, Chuan Yang, Dimin Niu, Yuan Xie and Zhiwen Liu.

- Karma: Scalable Deterministic Record-Replay. Arkaprava Basu, Jayaram Bobba and Mark D. Hill.

- Saturday, June 4

- 8:00AM - Noon:

- MPI/SMPSs (Coronado Room)

- CACHES (Salon E)

- WHIST (Salon D)

- 1:30PM - 5:00PM:

- MPI/SMPSs (Coronado Room)

- Optimization and Tuning (Santa Rita Room)

- CACHES (Salon E)

- WHIST (Salon D)

Keynote Address I

Rethinking Shared-Memory Languages and Hardware

Sarita Adve

University of Illinois at Urbana-Champaign

The era of parallel computing for the masses is here, but writing correct parallel programs remains difficult. For many domains, shared memory remains an attractive programming model. The memory model, which specifies the meaning of shared variables, is at the heart of this programming model. Unfortunately, it has involved a tradeoff between programmability and performance, and has arguably been one of the most challenging and contentious areas in both hardware architecture and programming language specification. Recent broad, community-scale efforts have finally led to a convergence in this debate, with popular languages such as Java and C++ and most hardware vendors publishing compatible memory model specifications. Although this convergence is a dramatic improvement, it has exposed fundamental shortcomings in current popular languages and systems that thwart safe and efficient parallel computing.

I will discuss the path to the above convergence, the hard lessons learned, and their implications. A cornerstone of this convergence has been the view that the memory model should be a contract between the programmer and the system: if the programmer writes disciplined (data-race-free) programs, the system will provide high programmability (sequential consistency) and performance. I will discuss why this view is the best we can do with current popular languages, and why it is inadequate moving forward, requiring a rethinking of popular parallel languages and hardware. In particular, I will argue that (1) parallel languages should not only promote high-level disciplined models but also enforce the discipline, and (2) for scalable and efficient performance, hardware should be co-designed to take advantage of and support such disciplined models. I will describe the Deterministic Parallel Java language and DeNovo hardware projects at Illinois as examples of such an approach.

This talk draws on collaborations with many colleagues over the last two decades on memory models (in particular, a CACM'10 paper with Hans-J. Boehm) and with faculty, researchers, and students from the DPJ and DeNovo projects.

Biography

Sarita Adve is Professor of Computer Science at the University of Illinois at Urbana-Champaign. Her research interests are in computer architecture and systems, parallel computing, and power- and reliability-aware systems. Most recently, she co-developed the memory models for the C++ and Java programming languages based on her early work on data-race-free models, and co-invented the concept of lifetime-reliability-aware processors and dynamic reliability management. She was named an ACM Fellow in 2010, received the ACM SIGARCH Maurice Wilkes Award in 2008, was named a University Scholar by the University of Illinois in 2004, and received an Alfred P. Sloan Research Fellowship in 1998. She serves on the boards of the Computing Research Association and ACM SIGARCH. She received her Ph.D. in Computer Science from the University of Wisconsin in 1993.

Keynote Address II

Challenges and Opportunities in Renewable Energy and Energy Efficiency

Steve Hammond

National Renewable Energy Laboratory

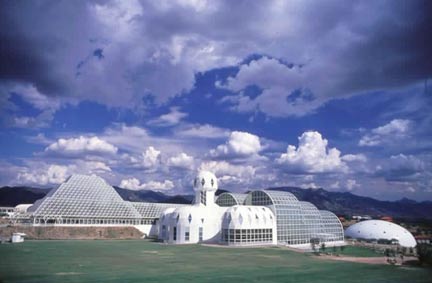

The National Renewable Energy Laboratory (NREL) in Golden, Colorado, is the nation's premier laboratory for renewable energy and energy efficiency research. In this talk we will give a brief overview of NREL and then focus on some of the challenges and opportunities in meeting future global energy needs. Computational modeling, high performance computing, data management and visual informatics are playing key roles in advancing our fundamental understanding of processes and systems at temporal and spatial scales that evade direct observation, and in helping meet U.S. goals for energy efficiency and clean energy production. This discussion will include details of new, highly energy-efficient buildings and of social behaviors that affect energy use; fundamental understanding of plants and proteins leading to lower-cost renewable fuels; novel computational chemistry approaches for low-cost photovoltaic materials; and computational fluid dynamics challenges in simulating complex behaviors within and between large-scale wind farm deployments and in understanding their potential impacts on local and regional climate.

Biography

Steve is the director of Computational Science at the National Renewable Energy Laboratory (NREL) in Golden, Colorado, where he leads the laboratory's efforts in high performance computing and energy-efficient data centers. Prior to joining NREL in 2002, Steve spent ten years at the National Center for Atmospheric Research (NCAR) in Boulder, Colorado, leading efforts to develop efficient massively parallel climate models.

Before NCAR, Steve did postdoctoral work at the European Center for Advanced Scientific Computing in Toulouse, France; was a Research Associate at the Research Institute for Advanced Computer Science, NASA Ames Research Center, Moffett Field, California; and was a Computer Scientist at GE's Corporate Research and Development Center in Schenectady, New York.

Keynote Address III

Performance Modeling as the Key to Extreme Scale Computing

William D. Gropp

University of Illinois at Urbana-Champaign

Parallel computing is primarily about achieving greater performance than is possible without using parallelism. Especially at the high end, where systems cost tens to hundreds of millions of dollars, making the best use of these valuable and scarce systems is important. Yet few application developers really understand how well their codes perform relative to the performance achievable on the system. The Blue Waters system, currently being installed at the University of Illinois, will offer sustained performance in excess of 1 PetaFLOPS for many applications. However, achieving this level of performance requires careful attention to many details, as this system has many features that must be used to get the best performance. To address this problem, the Blue Waters project is exploring the use of performance models that provide enough information to guide the development and tuning of applications, ranging from improving the performance of small loops to identifying the need for new algorithms. Using Blue Waters as an example of an extreme-scale system, this talk will describe some of the challenges faced by applications at this scale, the role that performance modeling can play in preparing applications for extreme scale, and some ways in which performance modeling has guided performance enhancements for those applications.